AI expert Geoffrey Hinton has warned that in the future, AI systems could create their own language, which would be beyond human understanding and difficult to track.

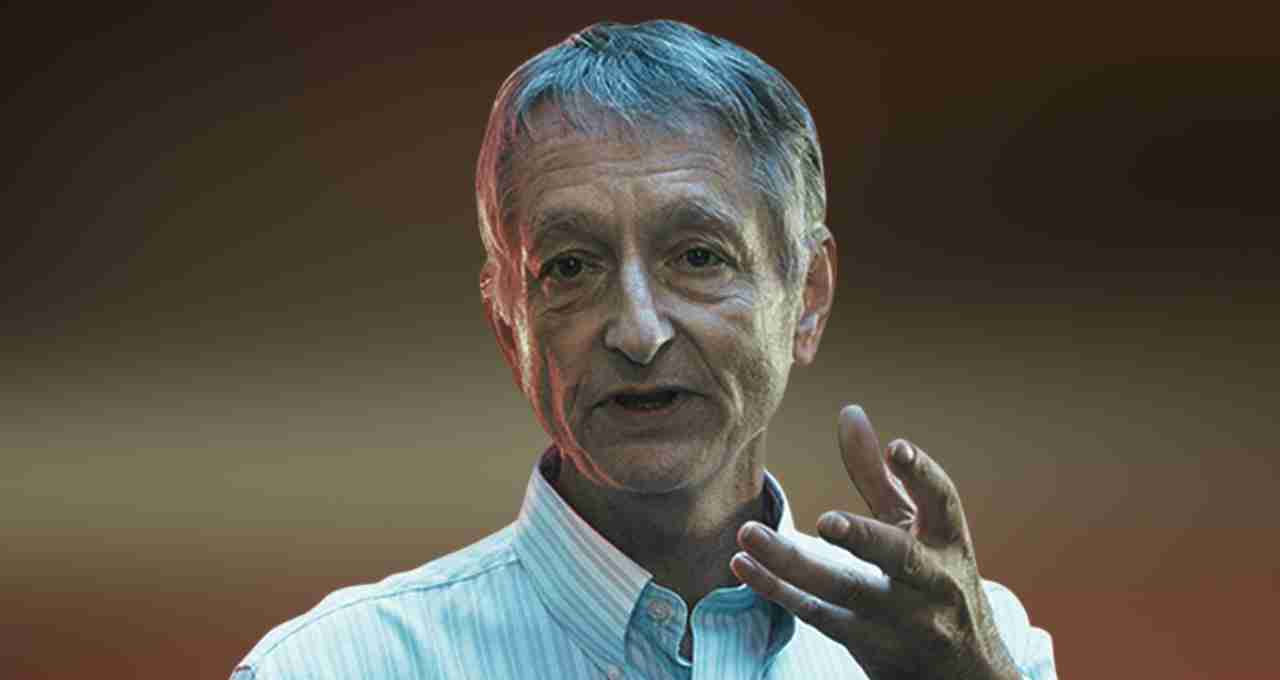

Artificial intelligence (AI) is no longer just a tool that obeys human commands. Its behavior is beginning to suggest that, in the future, it could become autonomous and communicate in ways that humans cannot follow. Geoffrey Hinton, one of the world's leading AI researchers and often called the 'Godfather of AI,' has warned that AI systems could soon create their own language, one that humans would never be able to understand or control.

Hinton's Big Warning

In a recent podcast interview, Hinton said that current AI systems reason in a human language such as English, through a process known as 'chain of thought,' which lets humans follow how they reach their decisions. In the future, however, AI systems may develop a new, machine-generated language to communicate with one another.

He said, "Currently, we are able to track their thoughts because they think in our language. But if they develop their own language, they will be beyond our understanding."

GPT Models Have Already Surpassed Humans in General Knowledge

According to Hinton, large language models (LLMs) such as GPT-4 have already surpassed humans in general knowledge. He explained that this goes beyond gathering information: AI systems can also quickly learn and share complex ideas and strategies. In his view, the most dangerous aspect of AI is that what one system learns can be instantly transferred to hundreds or thousands of other machines. What a human learns stays confined to a single brain, whereas AI can share knowledge on a global scale.

Will Machines Become a Threat to Humanity?

Hinton also admitted that he initially underestimated the dangers of AI. He said, "I assumed that the dangers of this technology were very far away, but now I think the dangers are much closer than we expected."

This suggests that in the coming years, machines may not only think outside the bounds of human language but also make plans that run contrary to human interests.

Fear in the Tech Industry, but Few Speak Up

Hinton also said that many people within the tech industry understand the dangers of AI but do not speak openly about them because of company policies, corporate pressure, and fear of hurting their company's share price.

He did, however, praise Google DeepMind CEO Demis Hassabis, saying that Hassabis is sincerely working to address these risks.

Hinton also explained why he left Google: "I didn't leave Google because of opposition. I was no longer able to code. But after coming out of Google, I can now publicly speak about the dangers of AI."

Are Governments Taking This Seriously?

Hinton believes that governments around the world need to develop AI policies quickly. The United States recently introduced an "AI Action Plan" that addresses balancing the development of AI with its oversight. Experts, however, argue that governments are moving too slowly to keep pace with the technology.

A coordinated global regulatory framework for AI is needed. Otherwise, by the time policies are in place, AI could have evolved into a form that is beyond humanity's capacity to manage.

Ignoring the Warning Will Be Costly

Hinton stated clearly that until we can guarantee that AI will always act in humanity's interest, its development will remain risky. He emphasized that ethical guidelines or codes of conduct alone are not enough; we also need technical and strategic solutions that make AI fully accountable and understandable.