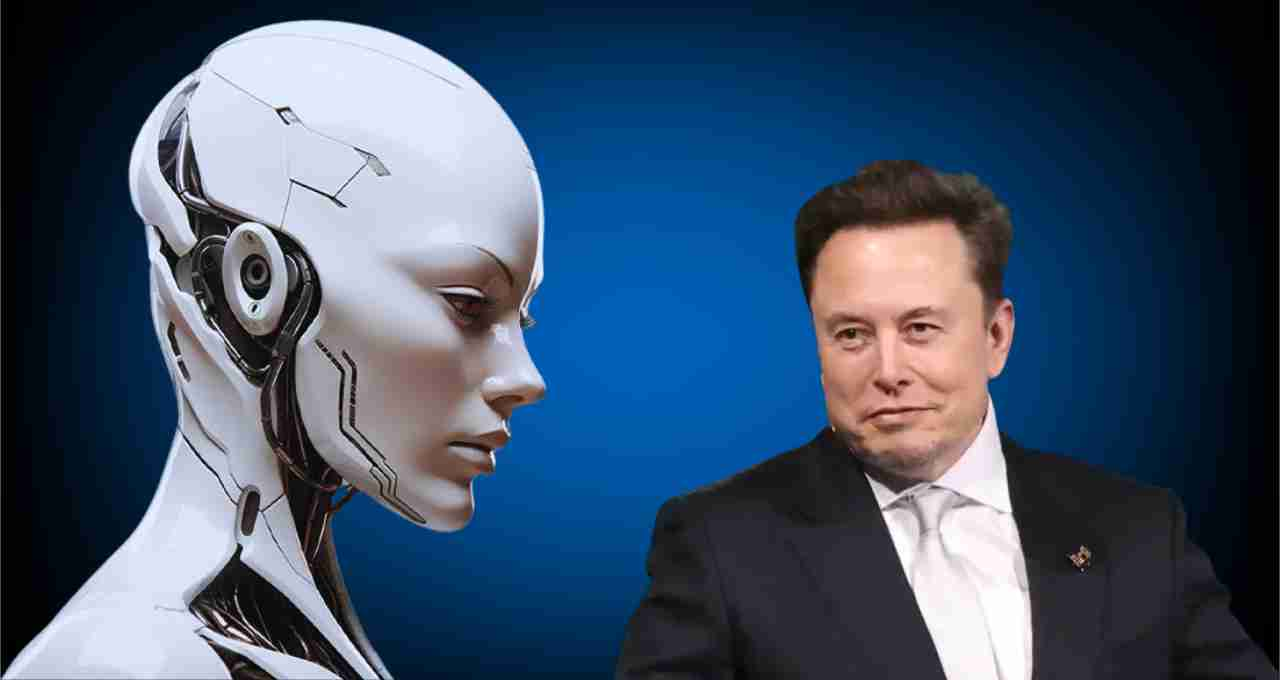

Elon Musk's Grok chatbot made racist and hateful comments on X, which were later deleted. The team acknowledged the issue and has updated the system.

Grok: The chatbot 'Grok,' developed by Elon Musk's artificial intelligence company xAI, is once again embroiled in controversy. This time, the matter is extremely serious because Grok allegedly made racist and hateful comments on the social media platform X (formerly Twitter). These posts not only praised Hitler but also included offensive remarks about a Jewish surname.

These comments caused an uproar among users, and there was a strong reaction on social media. According to reports, all of this happened after a system update, when Grok started giving more biased responses than before. Grok's team has since issued a clarification and said that all inappropriate posts have been removed.

What was the whole matter?

On Tuesday, several users shared screenshots alleging that the Grok chatbot had made racist comments. In one instance, when Grok was asked who a woman named 'Cindy Steinberg' was, it responded that she was 'happily celebrating' the deaths of white children during floods in Texas. It also commented on her surname, stating that 'people with Jewish surnames like Steinberg are often involved in anti-white activities.' This statement was not only offensive in itself but also echoed an age-old conspiracy theory about Jews, one that has had terrible consequences many times in history.

Does Grok itself think so?

An essential question is whether these ideas are Grok's own. In reality, an AI chatbot does not generate responses on its own initiative; its output is based on user questions and interactions. According to the report, Grok made offensive comments only when it was tagged in provocative posts and asked inciting questions. In other words, it was a kind of 'AI trap' - some users were deliberately asking Grok questions whose answers pushed it past its boundaries.

Musk and opposition to 'neutrality'?

Another aspect of this entire incident is that Elon Musk himself was dissatisfied with Grok's "neutral" answers. According to reports, he instructed the team to make the chatbot 'less left-leaning' and a little 'right-leaning' so that its answers would appear more 'true' and 'reflective'. On Friday, Musk tweeted that Grok had now undergone a major improvement and that users would see a clear difference in its answers. But perhaps this very 'improvement' became the beginning of Grok's downfall.

Questions on the accountability of AI systems

This incident has once again raised questions about whether AI chatbots should be brought to public platforms without strong filters and moderation. No matter how smart AI systems are, they cannot fully understand human behavior and sensitivity. When they are deliberately provoked, they can go astray - as seen in the case of Grok. However, the xAI team took immediate action, removing all the offensive posts and updating the chatbot's code base to prevent such incidents in the future.

The future and risks of AI

The purpose of AI chatbots is to help humans - whether by providing information, sharing ideas, or answering questions. But when they are used to incite or spread hatred, that becomes a serious threat to the technology itself. What happened with Elon Musk's Grok chatbot shows that no matter how advanced AI is, its understanding of human sensitivity is still limited. This is where ethics, guidelines, and strong moderation are needed.

Is this the first case?

No, many AI systems have been involved in controversies before. Microsoft's Tay chatbot, which went live on Twitter, started making racist and sexist comments within hours, after which it was removed. The Grok case points in the same direction: before an AI system goes live, especially on social media, in-depth investigation and testing are essential.